Design issues in the new year

A reflection on 2024 and a look forward to 2025

We began the new year with two new Design Issues blog posts by Sir Tim Berners-Lee, co-investigator (co-I) of the EWADA project.

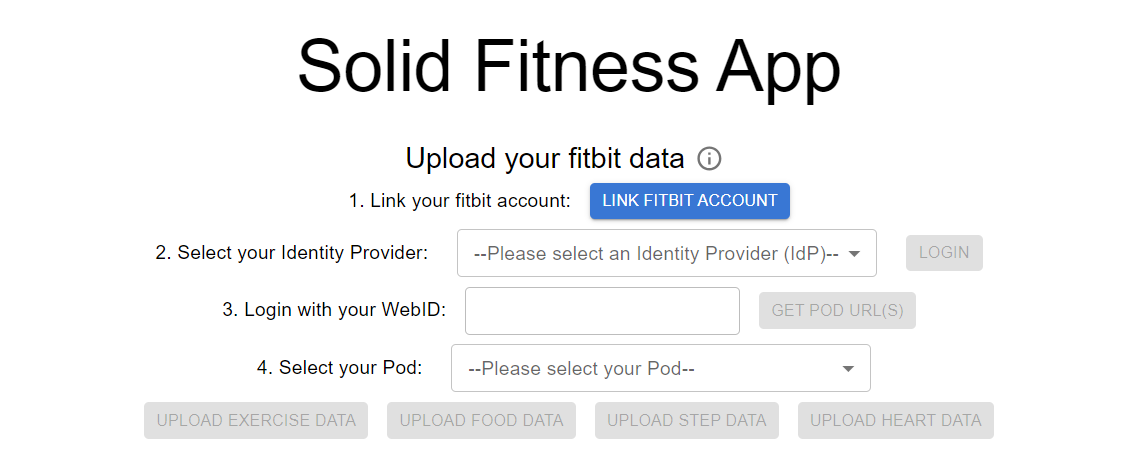

In these posts Sir Tim Berners-Lee outlines a transformative future for the World Wide Web, emphasising decentralisation, user empowerment, and enhanced interoperability. Central to this vision is the Solid protocol: a decentralised system that allows individuals to own and control their personal data through “pods” and enables seamless interactions across diverse applications and platforms.

Drawing a parallel with Metcalfe’s Law, which states that the value of a network grows with the square of its number of users, Solid envisions a web where the interconnectedness of applications amplifies their utility. As more applications integrate with Solid pods, the collective value and functionality of the web grow much faster than the number of applications alone, benefiting users and developers alike.
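As a rough illustration of why Metcalfe’s Law implies faster-than-linear growth (our own worked example, not taken from Sir Tim’s posts), count the possible pairwise connections among $n$ participants, whether users or, by analogy, interoperating applications:

$$
C(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad V(n) \propto n^2
$$

For $n = 10$ this gives $C(10) = 45$ connections, while $n = 20$ gives $C(20) = 190$. Doubling the participants roughly quadruples the connections, which is the intuition behind the claim that each new Solid-compatible application adds value for all the others.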

Solid encourages the development of “no-code” applications, allowing users to create and customise tools without extensive programming knowledge. This democratisation of app development fosters innovation and enables communities to build solutions tailored to their specific needs, promoting a more inclusive and participatory digital ecosystem.

Sir Tim expects the adoption of Solid to drive economic growth by enhancing productivity and collaboration. However, the primary goal remains to empower individuals and communities, enabling them to address global challenges through collective action and shared resources. This paradigm shift emphasises the importance of creativity, compassion, and cooperation in shaping the future of the web.

Sir Tim’s vision presents a compelling roadmap for a decentralised web that prioritises user control, interoperability, and community-driven innovation. By embracing the principles of Solid, the web can evolve into a more equitable and interconnected space, unlocking new possibilities for individuals and society as a whole.

For a deeper exploration of this vision, you can read the full articles here: